Sat 10 October 2015

With the recent announcement that AWS Lambda now supports Python, I decided to take a look at using it for geospatial data processing.

Previously, I had built queue-based systems with Celery that allow you to run discrete processing tasks in parallel on AWS infrastructure. Just start up as many workers on EC2 instances as you need, set up a broker and a results store, add jobs to the queue and collect the results. The problem with this system is that you have to manage all of the infrastructure and services yourself.

Ideally you wouldn't need to worry about infrastructure at all. That is the promise of AWS Lambda. Lambda can respond to events, fire up a worker and run the task without you needing to worry about provisioning a server. This is especially nice for sporadic work loads in response to events like user-uploaded data where you need to scale up or down regularly.

The reality of AWS Lambda is that you do need to worry about infrastructure in a different way. The constraints of the runtime environment mean that you need to get creative if you're doing anything beyond the basics. If your task relies on compiled code, either Python C extensions or shared libraries, you have to jump through some hoops. And for any geo data processing you are going to use a good amount of compiled code to call into C libs (see numpy, rasterio, GDAL, geopandas, Fiona, and so on)

This article describes my approach to solving the problem of running Python with calls to native code on AWS Lambda.

Outline

The short version goes like this:

- Start an EC2 instance using the official Amazon Linux AMI (based on Red Hat Enterprise Linux)

- On the EC2 insance, Build any shared libries from source.

- Create a virtualenv with all your python dependecies.

- Write a python handler function to respond to events and interact with other parts of AWS (e.g. fetch data from S3)

- Write a python worker, as a command line interface, to process the data

- Bundle the virtualenv, your code and the binary libs into a zip file

- Publish the zip file to AWS Lambda

The deployment process is a bit clunky but the benefit is that, once it works, you don't have any servers to manage! A fair tradeoff IMO.

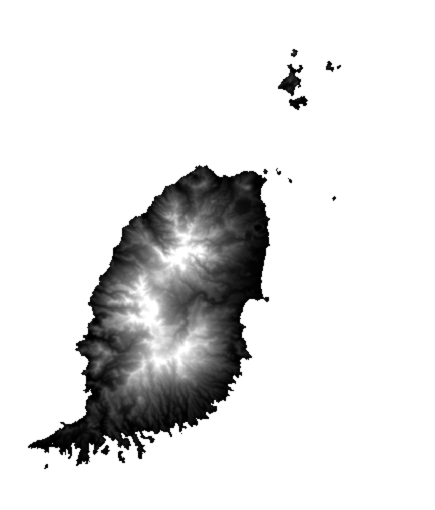

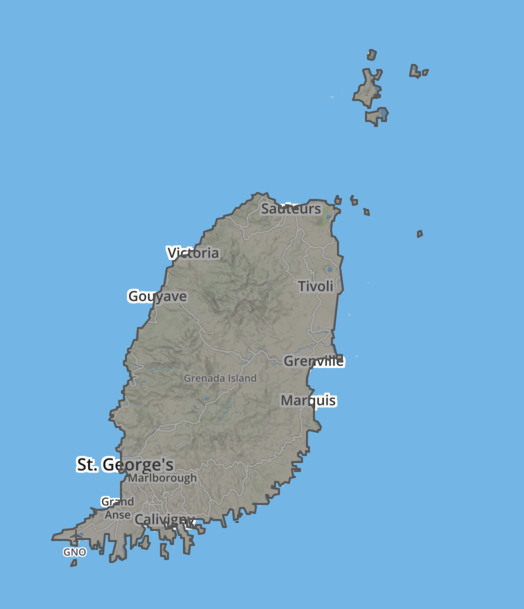

The process will take a raster dataset uploaded to the input s3 bucket

and automatically extract the shape of the valid data region, placing the resulting GeoJSON in the output s3 bucket.

Start EC2

Under the hood, your Lambda functions are running on EC2 with Amazon Linux. You don't have to think about that at runtime but, if you're calling native compiled code, it needs to be compiled on a similar OS. Theoretically you could do this with your own version of RHEL or CentOS but to be safe it's easier to use the official Amazon Linux since we know that's the exact environment our code will be run in.

I'm not going to go over the details of setting up EC2 so I'll assume we already have our account set up. The AMI ids are listed here, pick the appropriate one for your region

aws ec2 run-instances --image-id ami-9ff7e8af \

--count 1 --instance-type t2.micro \

--key-name your-key --security-groups your-sg

And ssh in

ssh -i your-key.pem ec2-user@your.public.ip

Make sure everything's up to date:

sudo yum -y update

sudo yum -y upgrade

Build shared libraries from source

Because your Lambda function will run in a clean AWS linux environment, you can't assume any system libraries will be there. Compiling from source isn't the only option - you could install binaries from the Enterprise Linux GIS effort but those tend to be older versions. To get more recent libs, compiling from source is an effective approach.

First install some compile-time deps

sudo yum install python27-devel python27-pip gcc libjpeg-devel zlib-devel gcc-c++

Then build and install proj4 to a local prefix

wget https://github.com/OSGeo/proj.4/archive/4.9.2.tar.gz

tar -zvxf 4.9.2.tar.gz

cd proj.4-4.9.2/

./configure --prefix=/home/ec2-user/lambda/local

make

make install

And build GDAL, statically linking proj4

wget http://download.osgeo.org/gdal/1.11.3/gdal-1.11.3.tar.gz

tar -xzvf gdal-1.11.3.tar.gz

cd gdal-1.11.3

./configure --prefix=/home/ec2-user/lambda/local \

--with-geos=/home/ec2-user/lambda/local/bin/geos-config \

--with-static-proj4=/home/ec2-user/lambda/local

make

make install

This should leave us with a nice shared library at /home/ec2_user/lambda/local/lib/libgdal.so.1 that can be safely

moved to another AWS Linux box.

Create a virtualenv

Pretty straighforward but keep in mind that some of the dependecies here are compiled extensions so these builds are platform-specific - which is why we need to build it on the target Amazon Linux OS.

virtualenv env

source env/bin/activate

export GDAL_CONFIG=/home/ec2-user/lambda/local/bin/gdal-config

pip install rasterio

Python handler function

The handler's job is to respond to the event (e.g. a new file created in an S3 bucket), perform any amazon-specific tasks (like

fetching data from s3) and invoke the worker. Importantly, in the context of this article, the handler

must set the LD_LIBRARY_PATH to point to any shared libraries that the worker may need.

import os

import subprocess

import uuid

import boto3

libdir = os.path.join(os.getcwd(), 'local', 'lib')

s3_client = boto3.client('s3')

def handler(event, context):

results = []

for record in event['Records']:

# Find input/output buckets and key names

bucket = record['s3']['bucket']['name']

output_bucket = "{}.geojson".format(bucket)

key = record['s3']['object']['key']

output_key = "{}.geojson".format(key)

# Download the raster locally

download_path = '/tmp/{}{}'.format(uuid.uuid4(), key)

s3_client.download_file(bucket, key, download_path)

# Call the worker, setting the environment variables

command = 'LD_LIBRARY_PATH={} python worker.py "{}"'.format(libdir, download_path)

output_path = subprocess.check_output(command, shell=True)

# Upload the output of the worker to S3

s3_client.upload_file(output_path.strip(), output_bucket, output_key)

results.append(output_path.strip())

return results

It's important that the handler function does not import any modules which require

dynamic linking. For example, you cannot import rasterio in the main python

handler since the dynamic linker doesn't yet know where to look for the GDAL shared library.

Your can control the linker paths using the LD_LIBRARY_PATH environment variable

but only before the process is started. Lambda doesn't give you any control over the environment variables

of the handler function itself. I

tried hacks like creating new processes within the handler using os.execv or multiprocessing pools but the user running the lambda function

doesn't have the necessary permissions to that (both give you OSErrors - [Errno 13] Permission Denied and [Errno 38] Function not implemented respectively).

Fortunately, Lambda lets you call out to the shell so we can just do our real work through a worker script exposed as a command line interface (details in the next section). While at first this feels clunky, it has the side benefit of forcing separation of your AWS code from your business logic which can be written and tested separately.

Worker

The worker script can be written in any language, compiled or interpreted, so long as it follows the basic rules of command line interfaces. We're using Python in the handler to set up the appropriate environment. For this example, the worker will also be written in Python because of it's awesome support for geospatial data processing. But it could be written in Bash or C or just about anything so long as it's runtime environment can be configured with environment variables and arguments.

In this case, the handler is calling worker.py which looks like:

import rasterio

from tempfile import NamedTemporaryFile

import json

import sys

from rasterio import features

def raster_shape(raster_path):

with rasterio.open(raster_path) as src:

# read the first band and create a binary mask

arr = src.read(1)

ndv = src.nodata

binarray = (arr == ndv).astype('uint8')

# extract shapes from raster

shapes = features.shapes(binarray, transform=src.transform)

# create geojson feature collection

fc = {

'type': 'FeatureCollection',

'features': []}

for geom, val in shapes:

if val == 0: # not nodata, i.e. valid data

feature = {

'type': 'Feature',

'properties': {'name': raster_path},

'geometry': geom}

fc['features'].append(feature)

# Write to file

with NamedTemporaryFile(suffix=".geojson", delete=False) as temp:

temp.file.write(json.dumps(fc))

return temp.name

if __name__ == "__main__":

in_path = sys.argv[1]

out_path = raster_shape(in_path)

print(out_path)

Notice how the worker itself has no knowledge of AWS events or S3 - it works entirely on the local filesystem and thus can be used in other contexts and tested much more easily.

Bundle

In order to deploy to Lambda, you need to package it up in a zip file in a slightly unusual manner. All of your Python packages and your handler script should be at the root while the shared libraries can be put in a directory (local/lib in this case)

cd ~/lambda

zip -9 bundle.zip handler.py

zip -r9 bundle.zip worker.py

zip -r9 bundle.zip local/lib/libgdal.so.1

cd $VIRTUAL_ENV/lib/python2.7/site-packages

zip -r9 ~/lambda/bundle.zip *

cd $VIRTUAL_ENV/lib64/python2.7/site-packages

zip -r9 ~/lambda/bundle.zip *

Publish

The details of setting up a Lambda function are far too verbose for this article - I would suggest running through the AWS S3 walkthrough to get the basic S3 example working first. Then use the AWS CLI to update your existing Lambda function:

aws lambda update-function-code \

--function-name testfunc1 \

--zip-file fileb://bundle.zip

The end result

Uploading a raster dataset to your S3 bucket should now trigger the Lambda function which will create a new GeoJSON in the output bucket. All automatically invoked based on the S3 events and completely scalable without having to worry about managing or provisioning servers. Nifty!

The worker and handler code above are intentionally kept short to be more readable. In real usage they would need significantly more error handling and conditionals to handle edge cases, malformed inputs, etc.

It occured to me after writing this that there really is nothing Python-specific about this approach - the handler could just as easily have been written in Javascript and the worker in some other language. But this should provide a general approach for incorporating native code of any sort in AWS Lambda.

It remains to be seen if this approach is faster or cheaper than a queue-based system with autoscaled EC2 instances. If you're doing a constantly-high workload with lots of data, it's probably safe to say that Lambda is not appropriate. If you're doing sporadic workloads with some discrete processing task based on user-uploaded data, Lambda might be the ticket. The primary advantage is not necessarily speed or cost but reduced infrastructure complexity and hands-off autoscaling.